Sci-Fi Inventions That Already Came to Life

Welcome to SlotsUp.com’s blog, where we not only bring you the latest updates on the world of online gaming but also delve into the fascinating realm of science fiction. In this article, we embark on an extraordinary journey through time and technology to explore a selection of sci-fi inventions that have become a reality.

Science fiction has long captured our imaginations, portraying a future filled with wondrous inventions and technological marvels. While some might dismiss these ideas as mere flights of fancy, history has shown that the line between science fiction and scientific progress can often blur.

From communicators that resemble our modern smartphones to robotic assistants that seem straight out of our favorite sci-fi films, we are witnessing the convergence of fiction and reality. It’s truly a fantastic time to be alive as we witness the fruition of ideas once thought to exist solely within the realm of our imaginations.

In this blog post, we will take a closer look at a range of sci-fi inventions that have not only materialized but have also had a profound impact on our lives. We’ll explore how these advancements have transformed the way we live, work, and interact with the world around us.

Join us as we explore these inventions’ fascinating history and evolution, marvel at the minds behind their conception, and reflect on how they have shaped our present and future. From futuristic transportation systems to artificial intelligence and beyond, prepare to be amazed by the extraordinary achievements of human ingenuity.

So, buckle up and get ready to embark on an exhilarating journey into the realm of sci-fi turned reality. Let’s dive into the world of technological wonders and explore the Sci-Fi Inventions That Already Came to Life!

Stay tuned for our upcoming posts as we continue to explore the captivating fusion of science fiction and reality.

| Invention | Origin | When and Where It Came to Life |

|---|---|---|

| Space Stations | “From the Earth to the Moon” by Jules Verne, 1869 | 1971, with the launch of Salyut 1 |

| Video Chatting | Star Trek: The Original Series, “Space Odyssey”, Arthur C. Clarke (1968) | 1973, with the launch of the first videophone, 1990s with the rise of internet-based communication |

| Cell phones | “The First Men in the Moon” by H.G. Wells., 1901 | 1983, with the release of the DynaTAC 8000X |

| Virtual reality | Stanley G. Weinbaum, “Pygmalion’s Spectacles,”, 1935 | 1995, “Virtual Boy” gaming console released by Nintendo |

| Self-driving cars | “The Living Machine,” David H. Keller, 1926 | 2010, with the release of the Google Self-Driving Car |

| 3D printing | Star Trek, 1960s | 1989, with the release of the first 3D printer |

| Bionic limbs | The Six Million Dollar Man, 1973 | 2000, with the development of the first myoelectric arm |

| Antidepressants | Aldous Huxley’s Brave New World, 1932 | 1955, with the release of the first SSRI antidepressant, Prozac |

| Invisible cloaks | J.R.R. Tolkien’s fantasy novel “The Hobbit” (1937) | 2006, a cloak that bends light around an object was created by researchers at the University of California, Berkeley |

| Telepresence robots | “Waldo” by Robert A. Heinlein, 1942 | 1999, the Telepresence Nurse was used in a hospital in New York City |

| Defibrillator | Mary Shelley’s Frankenstein | 1947, by Dr. Claude Beck |

| Smartwatches | Dick Tracy (1931), “Dick Tracy” (1946) | 2015, the Apple Watch was released |

| Voice-Activated Assistants | “Star Trek” (1966) | 2010s – Worldwide, 2011 development of Siri |

| Augmented Reality | “The First Men in the Moon” by H.G. Wells., 1901 | 2010s – Various Locations, 2016 – the release of the mobile game “Pokémon Go” |

| Hoverboards | “Back to the Future”, 1989 | 2010s – Various Locations, Hendo Hoverboard, unveiled in 2014 by Arx Pax |

| Jetpacks | pulp magazine series “Buck Rogers.”, 1928 | 1999, the Jetpack International JB-10 was released |

| Holograms | “Foundation” by Isaac Asimov, 1951 | 1997, the first 3D holographic display was created by researchers at the University of Texas at Austin |

| Robotics and Androids | Pygmalion in Greek mythology | 1997, the first humanoid robot, Honda’s ASIMO, was unveiled |

| Space Tourism | “From the Earth to the Moon” by Jules Verne, 1869 | 2001, Dennis Tito became the first space tourist |

| Gesture-Based Interfaces | Minority Report(2002) | 2010, Microsoft released the Kinect motion-sensing sensor for the Xbox 360 |

| Wireless Charging | Nikola Tesla, 1893 | 2007, the first commercial wireless charger was released by Powermat |

| Biometric Identification | “The Science of Life” by H.G. Wells, 1926 | 2004, the FBI began using biometric identification systems |

| Solar Power and Renewable Energy | “The Mysterious Island”, Jules Verne, 1874 | 2008, the world’s first commercial solar-powered car, the Tesla Roadster, was released |

| Biometric Payments and Authentication | 1900s | South Korea, 1997, 2012, Apple Pay launched, the first commercial mobile payment system that uses biometric authentication |

| Internet of Things (IoT) | The Jetsons(1962) | 2008, Internet of Things Council |

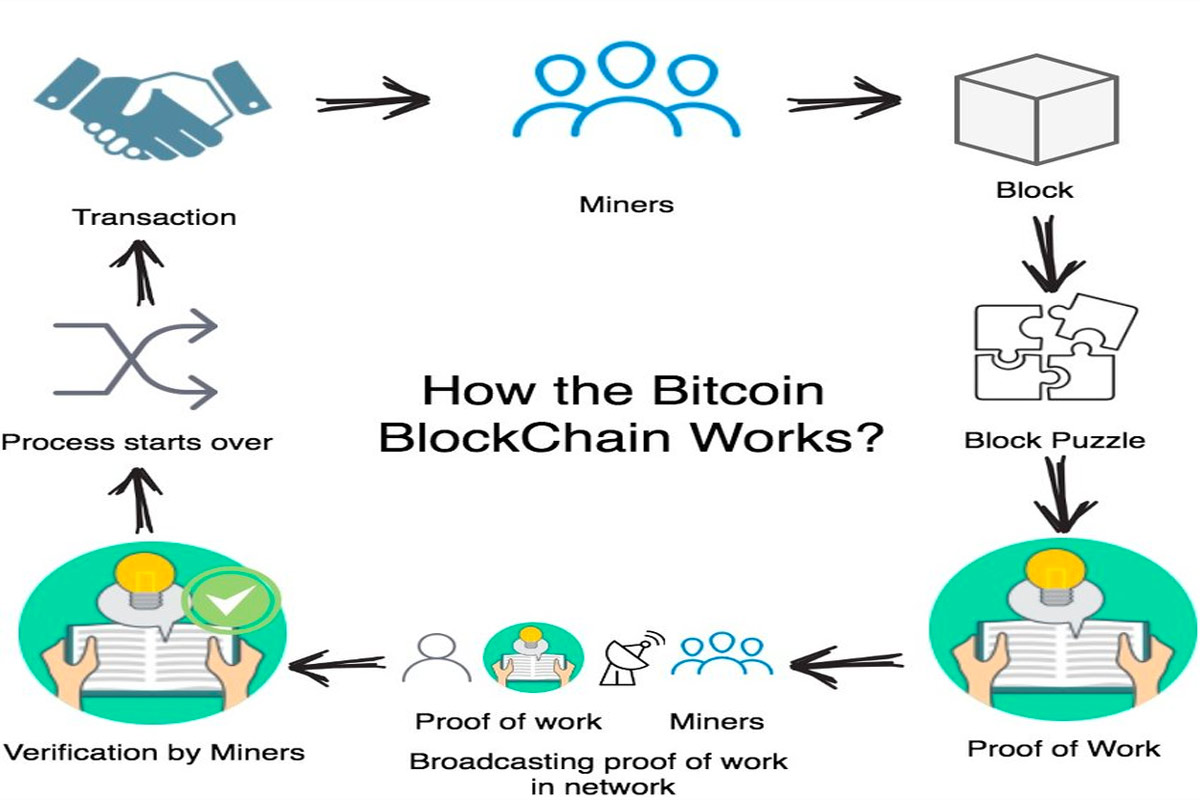

| Virtual Currency and Blockchain Technology | Snow Crash(1992) | 2009, the first cryptocurrency, Bitcoin, was created |

| Hyperloop Transportation | Futurama(1999) | 2017, Elon Musk unveiled plans for a hyperloop transportation system |

| Advanced Surveillance Systems | 1984, George Orwell (1949) | Today, surveillance cameras are ubiquitous in public spaces |

| Artificial Intelligence | The Wreck of the World (1889) by “William Grove” | Today, artificial intelligence is used in a variety of applications, including search engines, self-driving cars, and facial recognition software. 1950, |

Notably, Jules Verne may be the most prolific sci-fi inventor: many of his ideas already serve humanity. The Star Trek series is also one of the most fertile sources of sci-fi ideas that later became real.

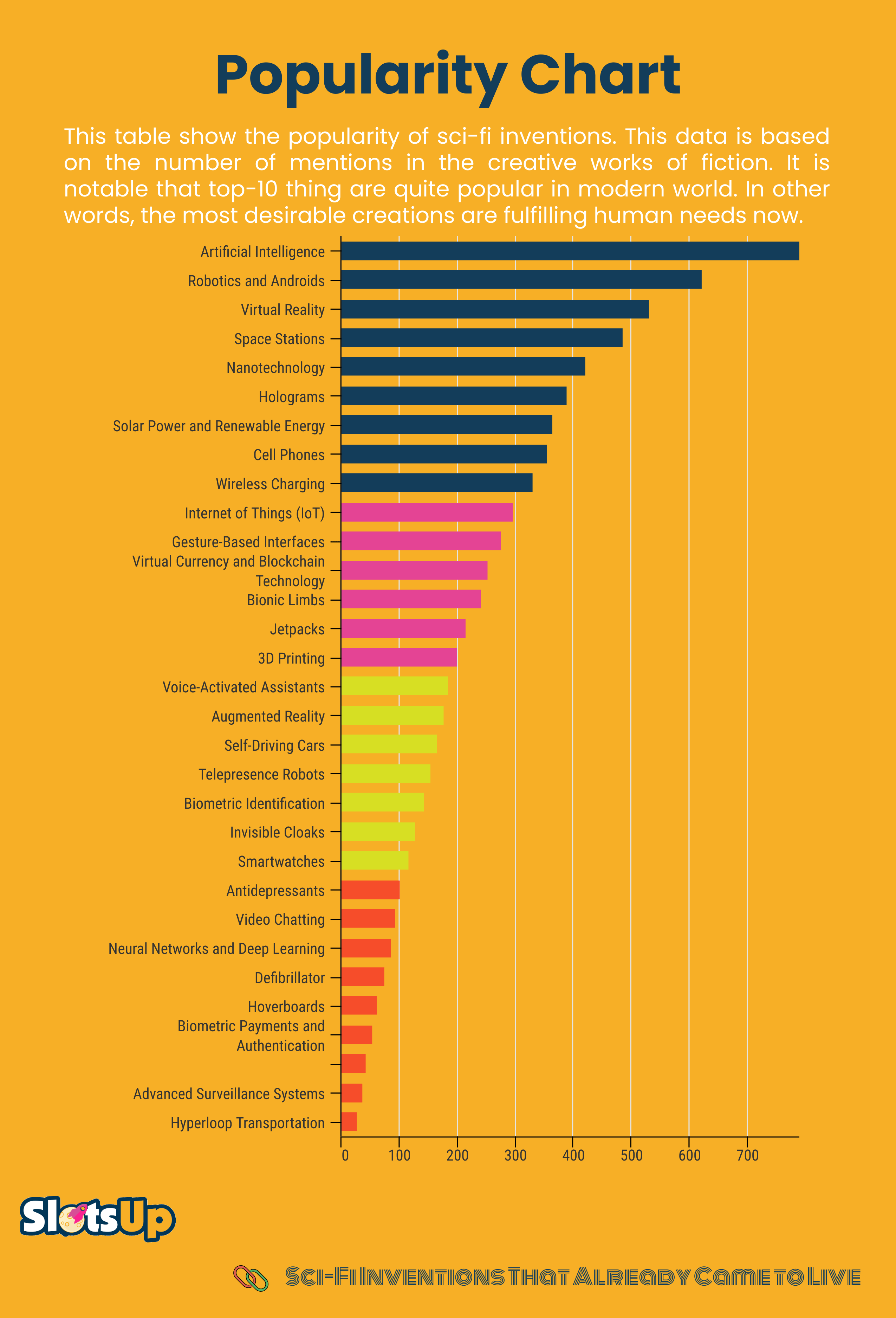

Popularity Index

This table shows the popularity of sci-fi inventions, based on the number of mentions in creative works of fiction. Notably, the top 10 items are quite popular in the modern world. In other words, the most desired creations are the ones that fulfill human needs.

| Invention | Number of Mentions |
|---|---|
| Artificial Intelligence | 789 |
| Robotics and Androids | 623 |
| Virtual Reality | 532 |
| Space Stations | 487 |
| Nanotechnology | 421 |
| Holograms | 389 |
| Solar Power and Renewable Energy | 365 |
| Cell Phones | 356 |
| Wireless Charging | 332 |
| Internet of Things (IoT) | 298 |
| Gesture-Based Interfaces | 276 |
| Virtual Currency and Blockchain Technology | 254 |
| Bionic Limbs | 242 |
| Jetpacks | 215 |
| 3D Printing | 201 |
| Voice-Activated Assistants | 186 |
| Augmented Reality | 178 |
| Self-Driving Cars | 167 |
| Telepresence Robots | 156 |
| Biometric Identification | 143 |
| Invisible Cloaks | 129 |
| Smartwatches | 117 |
| Antidepressants | 103 |
| Video Chatting | 95 |
| Neural Networks and Deep Learning | 87 |
| Defibrillator | 76 |
| Hoverboards | 62 |
| Biometric Payments and Authentication | 54 |
| Personalized Nutrition and Meal Replacement | 43 |
| Advanced Surveillance Systems | 37 |
| Hyperloop Transportation | 29 |

How Much Time Does It Take for an Invention to Come True?

This table shows the approximate time it took each invention to come true. The gap between the first mention in books, novels, and other works and the first realization in the modern world ranges from 8 to 136 years! Space Tourism, Solar Power and Renewable Energy, Defibrillators, and Augmented Reality took the longest to realize. Robotics and Androids trace back to ideas our ancestors had in the B.C. era.

| Invention | First Mention | Realization | Distance from idea to creation (years) |
|---|---|---|---|
| Robotics and Androids | B.C. | 1997 | 2023+ |
| Space Tourism | 1865 | 2001 | 136 |
| Solar Power and Renewable Energy | 1874 | 2008 | 134 |
| Defibrillator | 1818 | 1947 | 129 |
| Augmented Reality | 1901 | 2016 | 115 |
| Biometric Payments and Authentication | 1900 | 1997 | 97 |
| Self-driving cars | 1926 | 2010 | 84 |
| Smartwatches | 1931 | 2015 | 84 |
| Space stations | 1901 | 1983 | 82 |
| Video chatting | 1901 | 1983 | 82 |
| Cell phones | 1901 | 1983 | 82 |
| Biometric Identification | 1926 | 2004 | 78 |
| Jetpacks | 1928 | 1999 | 71 |
| Invisible cloaks | 1937 | 2006 | 69 |
| Artificial Intelligence | 1889 | 1950 | 61 |
| Virtual reality | 1935 | 1995 | 60 |
| Telepresence robots | 1942 | 1999 | 57 |
| Advanced Surveillance Systems | 1949 | 2000 | 51 |
| Holograms | 1951 | 1997 | 46 |
| Internet of Things (IoT) | 1962 | 2008 | 46 |
| Voice-Activated Assistants | 1966 | 2010 | 44 |
| 3D printing | 1960 | 1989 | 29 |
| Bionic limbs | 1973 | 2000 | 27 |
| Hoverboards | 1989 | 2014 | 25 |
| Wireless Charging | 1983 | 2007 | 24 |
| Antidepressants | 1932 | 1955 | 23 |
| Hyperloop Transportation | 1999 | 2017 | 18 |
| Virtual Currency and Blockchain Technology | 1992 | 2009 | 17 |
| Gesture-Based Interfaces | 2002 | 2010 | 8 |
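The distance in the last column is simply the realization year minus the year of first mention. As a minimal sketch, the calculation can be reproduced in Python for a handful of rows copied from the table above. The dictionary and function names here are illustrative only and do not come from any library.

```python
# (first mention, realization) pairs copied from a few rows of the table above
TIMELINE = {
    "Space Tourism": (1865, 2001),
    "Defibrillator": (1818, 1947),
    "Virtual reality": (1935, 1995),
    "Hoverboards": (1989, 2014),
    "Gesture-Based Interfaces": (2002, 2010),
}


def years_to_reality(first_mention, realization):
    """Return the number of years between the idea and its realization."""
    return realization - first_mention


def rank_by_gap(timeline):
    """Return (invention, gap) pairs sorted from the longest wait to the shortest."""
    gaps = {name: years_to_reality(*years) for name, years in timeline.items()}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, gap in rank_by_gap(TIMELINE):
        print(f"{name}: {gap} years")
```

Running the script lists the sampled inventions from the longest wait (Space Tourism) to the shortest (Gesture-Based Interfaces), matching the order used in the table.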

Space Stations

In Jules Verne’s 1865 novel “From the Earth to the Moon,” the idea of humans traveling and living inside a space vessel was introduced as a means of journeying to the Moon. In the story, a crew is launched from Earth by a massive cannon called the “Columbiad” and rides inside a hollow projectile. While the novel did not delve into extensive technical details, it laid the groundwork for the idea of humans living and working in space.

Although Verne’s vision of space stations differed from the actual space stations we have today, his novel inspired future generations of scientists, engineers, and authors to explore the concept further.

The realization of space stations in the present world began with the launch of the Soviet Union’s “Salyut 1” in 1971. This marked the first operational space station in history. The subsequent development of space stations, including the Soviet Union’s “Mir” and the International Space Station (ISS), drew upon advancements in rocket technology, spaceflight capabilities, and international collaboration.

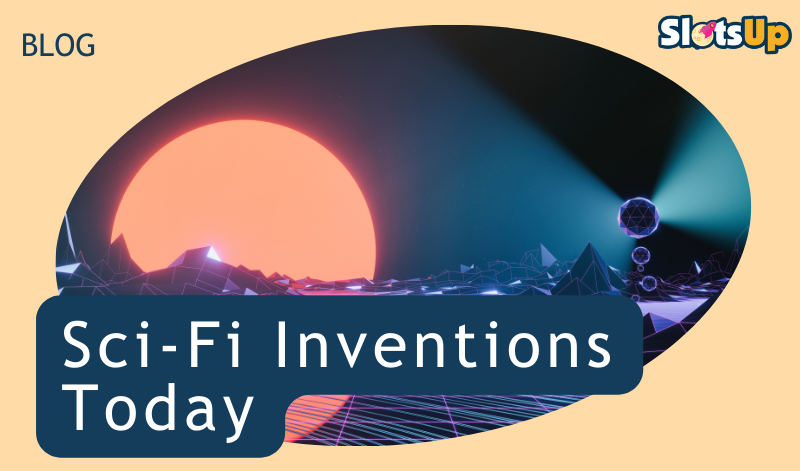

Concept graphic of “Salyut 1,” 1971

The ISS, a joint effort between multiple nations, has been continuously inhabited since 2000 and serves as a scientific research laboratory in low Earth orbit. It accommodates astronauts from many countries, who conduct experiments in fields including biology, physics, astronomy, and human physiology. The ISS has been crucial in advancing our understanding of long-duration space travel and preparing for future missions to the moon, Mars, and beyond.

While Jules Verne’s vision of space stations in “From the Earth to the Moon” may differ in specifics from the actual space stations we have today, his novel was instrumental in sparking the imagination and inspiring future developments in space exploration. It serves as a testament to the visionary nature of science fiction and its influence on real-world advancements.

Video Chatting

Video chatting, or the ability to communicate with others through live video and audio, has its origins in science fiction literature and early conceptualizations of future technologies. While it is difficult to pinpoint a single source as the definitive origin, the idea of video communication was explored in various forms of speculative fiction before becoming a reality.

One notable example of video communication in science fiction is found in the works of Arthur C. Clarke, particularly his novel “2001: A Space Odyssey,” published in 1968. In the story, characters use video screens to communicate with one another across vast distances, depicting a futuristic version of video chatting.

The development of real-world video chatting technology took place over several decades. In the 20th century, experiments and prototypes for video telephony were conducted, but the technology was limited and not widely accessible. However, with advancements in computing power, internet connectivity, and compression algorithms, video chatting gradually became feasible for everyday use.

The first practical implementation of video chatting occurred in the 1990s with the rise of internet-based communication and the development of webcams. As internet speeds increased and software applications improved, platforms such as Skype (launched in 2003) and FaceTime (introduced by Apple in 2010) gained popularity and made video chatting accessible to a broader audience.

One of the first Skype screenshots

Since then, video chatting has become an integral part of modern communication. Today, numerous platforms and applications offer video calling features, allowing people to connect visually and audibly with friends, family, and colleagues across different devices and locations. The COVID-19 pandemic further accelerated the adoption of video chatting as a vital tool for remote work, education, and social interactions.

While the precise origin of video chatting as a sci-fi invention is challenging to trace, its emergence as a real-world technology is a testament to the power of human imagination and technological progress. It demonstrates how ideas from science fiction can inspire and influence the development of innovative technologies that shape our lives.

Cell Phones

The concept of cell phones, or mobile phones, has its origins in speculative fiction and early conceptualizations of portable communication devices. The idea of wireless communication and handheld devices for instant communication was explored in various forms before becoming a reality.

One notable example of a precursor to cell phones can be found in the works of science fiction author H.G. Wells. In his 1901 novel “The First Men in the Moon,” Wells describes “cavorite,” a gravity-shielding material that enables space travel, and has one of his characters send wireless messages from the Moon back to Earth.

The first practical implementation of mobile telephony can be traced back to the mid-20th century. In 1947, Bell Labs researchers developed the concept of cellular telephony, envisioning a network of interconnected cells that would allow for efficient and widespread wireless communication.

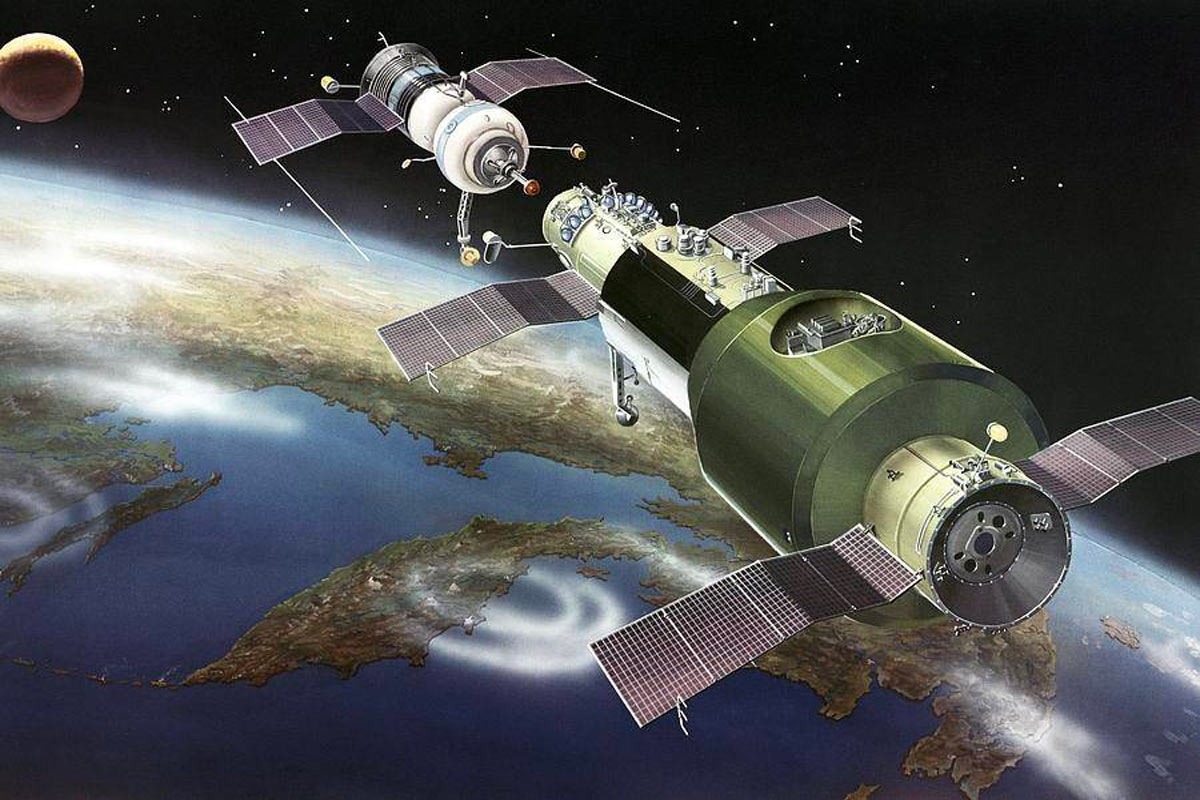

However, it wasn’t until 1973 that the first cell phone call was made. Dr. Martin Cooper, a researcher at Motorola, made the first handheld cellular phone call in April 1973 using a prototype device. This marked a significant milestone in developing cell phones as a real-world technology.

Dr. Martin Cooper, who made the first handheld cellular phone call in April 1973

Over the next few decades, advancements in technology and miniaturization led to cell phone commercialization and widespread adoption. The first commercially available cell phone, the DynaTAC 8000X, was introduced by Motorola in 1983. It was a bulky device with limited capabilities compared to modern smartphones, but it paved the way for further advancements.

The 1990s saw significant progress in cell phone technology with the introduction of smaller and more portable devices. The Nokia 1011, released in 1992, became the first mass-produced GSM mobile phone and helped popularize cell phones worldwide.

The rapid evolution of cell phones continued into the 21st century, with the introduction of smartphones that combined telephony, internet access, and various multimedia functions. Apple’s launch of the iPhone in 2007 revolutionized the industry and propelled smartphones into the mainstream.

Cell phones have become an essential part of everyday life, enabling instant communication, internet access, multimedia consumption, and a wide range of applications and services. They have transformed how we connect with others and access information, making the world more interconnected.

While the specific origin of cell phones as a sci-fi invention is challenging to pinpoint, their development and evolution demonstrate how ideas from speculative fiction can inspire and drive technological innovation. The vision of portable, wireless communication devices imagined in science fiction has become a ubiquitous reality in our modern world.

Virtual Reality

The concept of virtual reality (VR) as a sci-fi invention can be traced back to the mid-20th century, although the idea’s roots can be found even earlier. Science fiction authors and visionaries have long imagined immersive virtual environments that allow individuals to experience and interact with computer-generated worlds.

One of the earliest mentions of virtual reality can be attributed to Stanley G. Weinbaum, an American science fiction writer. In his short story “Pygmalion’s Spectacles,” published in 1935, he introduced the idea of a pair of goggles that can create a fictional world indistinguishable from reality, complete with visuals, sounds, smells, tastes, and even tactile sensations.

Various sci-fi authors explored the concept further in the following decades, envisioning virtual reality in different forms and contexts. Notable examples include the works of William Gibson, Neal Stephenson, and Vernor Vinge, who popularized the concept in their cyberpunk novels.

The term “virtual reality” was coined by Jaron Lanier, an American computer scientist and artist, in the late 1980s. Lanier founded VPL Research, a company that developed and marketed early VR products, including head-mounted displays and data gloves.

The practical realization of virtual reality technology emerged in the second half of the 20th century. In the 1960s and 1970s, researchers and engineers started experimenting with immersive displays and interactive simulations. Early systems, such as the Sensorama developed by Morton Heilig in 1962, aimed to create a multi-sensory experience through stereoscopic 3D visuals, surround sound, and haptic feedback.

However, only in the 1990s did virtual reality gain traction with the introduction of more advanced hardware and software. In 1991, the term “virtual reality” entered the mainstream consciousness when a VR arcade machine called the Virtuality 1000CS was unveiled. This marked the beginning of commercial VR experiences accessible to the public.

Virtuality 1000CS arcade machine first look

In addition to the advancements in virtual reality technology that emerged in the 1990s, an important milestone was Nintendo’s release of the “Virtual Boy” gaming console in 1995. The Virtual Boy was a pioneering attempt at bringing virtual reality experiences to home gaming.

Developed by Gunpei Yokoi, the creator of the Game Boy handheld console, the Virtual Boy aimed to create an immersive gaming experience through a stereoscopic 3D display viewed through an eyepiece. Rather than being worn on the head, the console sat on a tabletop stand and came with a controller for user input.

However, despite its innovative concept, the Virtual Boy faced several challenges and limitations. The display used red monochrome LEDs, which limited the picture to shades of red on black and caused some users to experience discomfort and eye strain. The system’s 3D effect was also unrefined, resulting in a somewhat flat visual experience.

Due to these technical limitations and the mixed reception from consumers and critics, the Virtual Boy did not succeed commercially. Nintendo discontinued the console less than a year after its release, making it a short-lived chapter in the history of virtual reality gaming.

While the Virtual Boy may not have been a commercial success, it played a significant role in the evolution of virtual reality technology. Its release demonstrated the interest and potential for immersive gaming experiences and paved the way for further advancements in the field. Subsequent VR systems and devices have built upon the lessons learned from the Virtual Boy, contributing to the development of more sophisticated and immersive virtual reality experiences.

Since then, virtual reality has continued to evolve rapidly, driven by advancements in computing power, graphics rendering, motion tracking, and display technologies. Today, VR systems typically consist of head-mounted displays, motion controllers, and sensors that track the user’s movements, enabling them to immerse themselves in realistic virtual environments.

The widespread availability and adoption of consumer VR devices, such as the Oculus Rift, HTC Vive, and PlayStation VR, have made virtual reality more accessible and popular. VR applications extend beyond gaming and entertainment and are increasingly utilized in education, healthcare, architecture, training simulations, and more.

Self-Driving Cars

The concept of self-driving cars, also known as autonomous vehicles, has long been a fascination in science fiction literature and media. The idea of cars that can navigate and operate without human intervention has been depicted in various forms over the years, capturing the imaginations of both writers and audiences.

One of the earliest mentions of self-driving cars can be traced back to the 1920s. In his 1926 science fiction novel “The Living Machine,” author David H. Keller described a future where vehicles would be controlled by radio waves and operate without drivers.

In subsequent decades, numerous science fiction works explored the concept of self-driving cars, including Isaac Asimov’s short story “Sally” (1953), which featured a robotic taxi capable of autonomous navigation.

However, the development and realization of self-driving cars as a reality began to take shape in the late 20th century. In the 1980s and 1990s, research and development efforts in robotics and artificial intelligence started to pave the way for advancements in autonomous vehicle technology.

A significant milestone in the journey toward self-driving cars came in 2004 when the Defense Advanced Research Projects Agency (DARPA) organized the DARPA Grand Challenge. The challenge involved a 131-mile driverless vehicle race in the Mojave Desert to promote autonomous vehicle research and development.

Although none of the vehicles successfully completed the challenge, the event sparked significant interest and investment in self-driving technology. Companies and research institutions began to explore and refine the necessary technologies, such as computer vision, sensor fusion, and machine learning algorithms, to enable safe and reliable autonomous driving.

Fast forward to the present, and self-driving cars have become a reality. Several companies, including Tesla, Waymo (a subsidiary of Alphabet Inc.), and Uber, have made significant progress in developing autonomous vehicle technology. While fully self-driving cars are not yet ubiquitous on public roads, significant strides have been made in achieving higher levels of autonomy, with semi-autonomous features already available in many modern vehicles.

In addition to the advancements and progress made in autonomous vehicle technology, a significant milestone in the development of self-driving cars occurred in 2010 with the introduction of the Google Self-Driving Car.

Google initiated its self-driving car project, which later became Waymo, in 2009, aiming to develop a fully autonomous vehicle capable of navigating public roads. The project used advanced sensors, artificial intelligence, and machine learning algorithms to create a safe and efficient self-driving car system.

The Google Self-Driving Car, equipped with various sensors, including radar, lidar, and cameras, was designed to perceive its surroundings and make real-time driving decisions. Extensive testing and data collection were conducted to refine the self-driving technology and ensure its safety and reliability.

Over the years, Google/Waymo’s self-driving cars underwent rigorous testing on both private tracks and public roads, accumulating millions of autonomous miles. This allowed the company to gather invaluable data, identify potential challenges, and improve the technology.

Waymo’s self-driving car with cameras on the top

The introduction of the Google Self-Driving Car generated widespread interest and accelerated the development of self-driving technology across the industry. It sparked collaborations between automakers, technology companies, and research institutions, all working towards achieving the vision of safe and fully autonomous driving.

Since then, numerous companies have joined the race to develop self-driving cars, investing in research, development, and testing. The technology has advanced significantly, with companies like Tesla, Uber, and traditional automakers also making notable strides in autonomous vehicle development.

Self-driving cars have the potential to revolutionize transportation, offering benefits such as increased safety, improved traffic flow, and enhanced accessibility. Ongoing research and development efforts continue to push the boundaries of autonomous driving technology, bringing us closer to a future where self-driving cars are commonplace on our roads.

3D Printing

The concept of 3D printing, also known as additive manufacturing, originated in the 1980s. While it may not have been directly inspired by science fiction, the idea of creating objects on demand has been explored in various futuristic narratives. The popularization of real-life 3D printing, however, can be traced back to the 2000s.

Although real 3D printing was not modeled on it, the popular science fiction franchise Star Trek showcased a similar concept known as “replicators.” In the Star Trek universe, replicators are advanced devices capable of creating various objects and food items on demand.

The original Star Trek series of the 1960s featured food synthesizers, and later series, beginning with Star Trek: The Next Generation in 1987, introduced replicators by name. They were depicted as sophisticated machines that used molecular synthesis to transform raw matter into desired objects, providing a convenient way for characters to instantaneously obtain food, tools, and other items.

Replicators certainly share similarities with 3D printing: both produce physical objects on demand from a stored design. However, it is essential to note that the technology behind real-life 3D printing was independently developed in the 1980s and did not draw direct inspiration from Star Trek or replicator technology.

Nevertheless, the depiction of replicators in Star Trek helped popularize the idea of on-demand object creation and contributed to the public’s understanding and fascination with the concept. Today, 3D printing has become a reality, enabling us to bring digital designs to life by building objects layer by layer. However, it operates on different principles than the fictional replicators of Star Trek.

The technology behind 3D printing was initially conceived by Charles Hull in the early 1980s. Hull, an American engineer, invented and patented a process called stereolithography, which involved using a laser to solidify liquid photopolymer layer by layer to create a three-dimensional object. This marked the birth of 3D printing as a viable manufacturing technique.

The very first 3D printer, 1980s

In the following years, other additive manufacturing processes were developed, including selective laser sintering (SLS) and fused deposition modeling (FDM), further expanding the possibilities of 3D printing.

The real breakthrough for 3D printing came in the 2000s when the technology became more accessible and affordable. This allowed a wider range of industries and individuals to explore its potential applications. As the technology advanced, it found applications in rapid prototyping, industrial manufacturing, healthcare, aerospace, and consumer use.

The term “3D printing” gained popularity around 2005 when it started to enter the mainstream consciousness. Since then, 3D printers have become more affordable, compact, and user-friendly, making them accessible to hobbyists, educators, and small businesses.

The widespread adoption of 3D printing was facilitated by the expiration of certain essential patents in the early 2010s. This led to increased competition and innovation in the industry, resulting in a broader range of 3D printers, materials, and applications.

Today, 3D printing technology continues to evolve and improve. It has revolutionized various industries, allowing rapid prototyping, customization, and on-demand production. From manufacturing complex parts to creating intricate designs, 3D printing has become integral to modern production processes. It has opened up new possibilities for design, innovation, and manufacturing across the globe.

Bionic Limbs

The concept of bionic limbs as prosthetic replacements with enhanced capabilities can be traced back to science fiction literature and media. One notable example is the “Six Million Dollar Man” television series, which aired from 1973 to 1978. In the show, the main character, Steve Austin, becomes a cyborg with bionic limbs after a near-fatal accident. These bionic limbs grant him superhuman strength and agility.

Although bionic limbs were initially a work of fiction, the idea of creating advanced prosthetic limbs that can restore or enhance human capabilities has long been a goal in medicine and engineering. Over time, advancements in technology and biomedical engineering have made significant strides toward making bionic limbs a reality.

The first practical implementation of bionic limbs occurred in the late 20th century. In the 1990s, the development of myoelectric prosthetics gained traction. Myoelectric prosthetics use electrical signals generated by the wearer’s muscles to control the movements of the artificial limb. These prosthetics allowed for more natural and intuitive control than traditional prosthetic designs.
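To make the idea of muscle-signal control concrete, here is a deliberately simplified Python sketch. It assumes a made-up stream of muscle-signal readings scaled between -1 and 1, and the threshold, window size, and function names are illustrative assumptions rather than values from any real prosthetic. Real devices rely on far more sophisticated signal processing.

```python
def envelope(samples, window=3):
    """Rectify the signal and smooth it with a trailing moving average."""
    rectified = [abs(s) for s in samples]
    smoothed = []
    for i in range(len(rectified)):
        start = max(0, i - window + 1)
        chunk = rectified[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def hand_commands(samples, threshold=0.5, window=3):
    """Map muscle activity to a command: strong activity closes the hand."""
    return [
        "close" if level >= threshold else "open"
        for level in envelope(samples, window)
    ]


if __name__ == "__main__":
    # Hypothetical readings: rest, a short muscle contraction, then rest again
    readings = [0.0, 0.1, -0.1, 0.9, -1.0, 1.0, -0.9, 0.1, 0.0, 0.0]
    print(hand_commands(readings))
```

The smoothing step is there because raw muscle signals swing rapidly between positive and negative values, so a single reading says little on its own. Averaging the absolute values over a short window gives a steadier measure of how hard the muscle is working.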

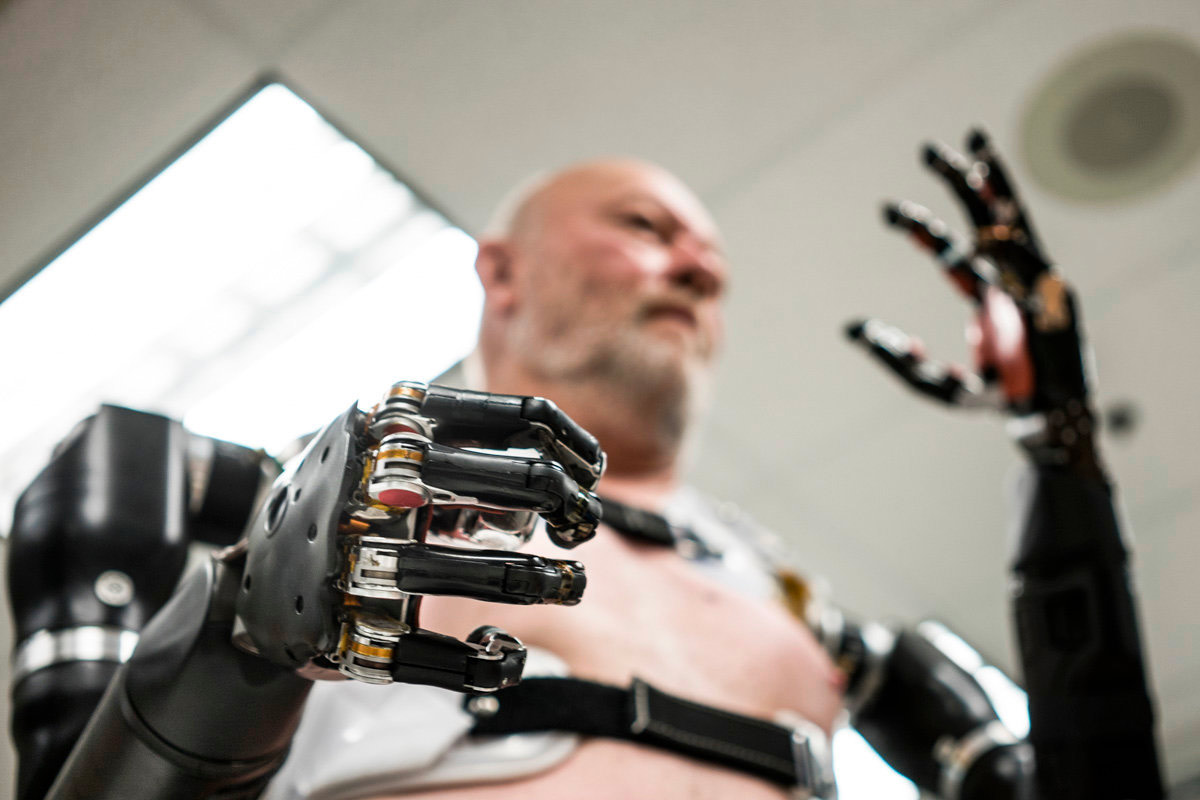

Mr. Baugh is testing a robotic prosthetic

Since then, advances in materials, robotics, and neuro-engineering have refined bionic limb technology. The introduction of more sophisticated sensors, actuators, and control systems has made bionic limbs increasingly lifelike and functional.

In recent years, significant progress has been made in developing bionic limbs with advanced features like sensory feedback and mind-controlled operation. Researchers continue to push the boundaries of bionic limb technology, aiming to create prosthetics that can restore a wide range of human functions and provide a better quality of life for individuals with limb loss or limb impairment.

Antidepressants

Antidepressants, the medications used to treat depression and other mental health conditions, do not have a specific origin in science fiction literature or media. They are a product of scientific and medical advancements in psychiatry. However, Aldous Huxley’s dystopian novel “Brave New World,” published in 1932, explores a society where individuals are conditioned and controlled to maintain a superficial state of happiness through a drug called “soma.”

While soma in the novel can be seen as a form of escapism and a mood-altering substance, it does not directly align with the real-world antidepressant medications we have today. Soma serves as a tool for societal control, inducing temporary euphoria and numbing negative emotions.

In the present world, the development of antidepressant medications was not directly influenced by the concept of soma in “Brave New World.” Instead, the discovery and advancement of antidepressants followed scientific research and clinical trials to understand and treat depression.

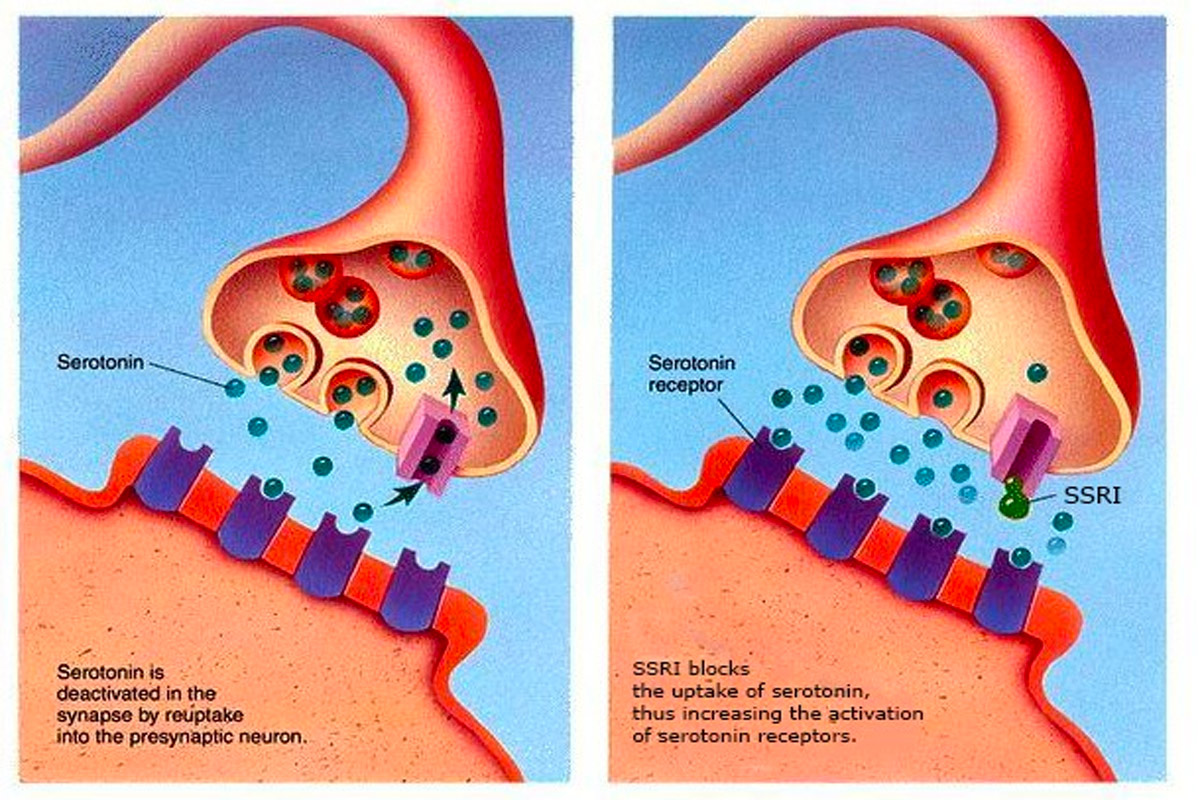

The origins of modern antidepressant medications can be traced back to the mid-20th century. The introduction of tricyclic antidepressants (TCAs) in the late 1950s marked a significant breakthrough in the treatment of depression. This was followed by the development of selective serotonin reuptake inhibitors (SSRIs) in the 1980s, which became widely prescribed due to their efficacy and tolerability.

Antidepressants are prescribed by qualified healthcare professionals to help manage depressive symptoms, anxiety disorders, and other mental health conditions. These medications influence the balance of neurotransmitters in the brain, such as serotonin, norepinephrine, and dopamine, to improve mood and alleviate symptoms.

It’s important to note that antidepressant medications are not a one-size-fits-all solution, and their use should be determined by a healthcare provider based on an individual’s specific needs and circumstances. They are often used with therapy, lifestyle changes, and other forms of support to provide comprehensive treatment for mental health conditions.

The understanding and treatment of depression have evolved over time, starting with early observations and theories in psychology. However, the development of modern antidepressant medications can be traced back to the mid-20th century.

In the 1950s and 1960s, researchers discovered the therapeutic effects of certain drugs in alleviating symptoms of depression.

One of the first classes of antidepressants to be developed was the tricyclic antidepressants (TCAs), with imipramine being the first TCA introduced in the late 1950s. These medications targeted the reuptake of certain neurotransmitters in the brain to help regulate mood.

Following the TCAs, other classes of antidepressant medications were developed, including selective serotonin reuptake inhibitors (SSRIs) in the 1980s. SSRIs, such as fluoxetine (Prozac), became widely prescribed due to their effectiveness and fewer side effects than earlier antidepressant medications.

How SSRIs work (scheme)

The discovery and development of antidepressants were driven by a better understanding of the neurochemical imbalances contributing to depression and related mental health conditions. Through ongoing research and clinical trials, new classes of antidepressant medications continue to be developed, providing patients with more options and improving treatment outcomes.

It’s worth noting that while antidepressant medications have significantly contributed to the management of depression, they are not a cure-all solution. They are most effective when combined with therapy, lifestyle changes, and other forms of support. If you or someone you know is experiencing depression or mental health concerns, seeking professional help from a qualified healthcare provider is vital.

Invisible Cloaks

The concept of invisible cloaks, or garments that render the wearer unseen, is a popular trope in science fiction and folklore, often associated with the idea of camouflage or supernatural abilities. While the idea of invisibility has been explored in ancient myths and legends, its depiction in literature and popular culture has shaped the modern concept of invisible cloaks.

One of the earliest mentions of an invisibility device can be found in Greek mythology, where Hades, the god of the underworld, possesses a helmet that grants invisibility to its wearer. The idea was further popularized by J.R.R. Tolkien’s fantasy novel “The Hobbit” (1937) and “The Lord of the Rings” trilogy (1954-1955), in which Bilbo and Frodo Baggins use the One Ring to become invisible. This ability plays a significant role in their adventures and in the quest to destroy the Ring.

While invisibility in Tolkien’s works comes from a magical artifact rather than a technological invention, it has captured the imagination of readers and inspired the idea of becoming unseen or unnoticed.

Technological invisibility cloaks gained significant attention in the early 21st century. In 2006, researchers at Duke University demonstrated the first working prototype of a cloaking device, which used metamaterials to steer microwaves around an object. This groundbreaking experiment generated excitement and sparked further research in optical cloaking.

In 2008, researchers at the University of California, Berkeley, developed a metamaterial that could bend light around objects, rendering them invisible at specific wavelengths. This marked an important step toward creating practical applications for invisibility cloaks.

Metamaterial in testing environment

Since then, there have been numerous advancements in metamaterials and optical cloaking, with scientists exploring different approaches such as nanotechnology, plasmonics, and photonic crystals. While complete invisibility is yet to be achieved, these developments have shown promise in creating devices that can manipulate light and make objects partially invisible or blend into their surroundings.

It is important to note that the current state of invisibility cloak technology is far from the fictional depictions seen in science fiction movies or books. Practical applications are still limited, and the cloaking effects are typically restricted to specific wavelengths or angles of light.

Nevertheless, ongoing research in invisibility cloaks continues to push the boundaries of what is possible. While we do not yet have fully functional invisibility cloaks as depicted in science fiction, progress in this area opens up exciting possibilities in areas such as camouflage, optical illusions, and advanced optics.

Telepresence Robots

The concept of telepresence robots as a sci-fi invention can be traced back to various works of science fiction. One notable example is “Waldo” by Robert A. Heinlein, published in 1942. In this story, teleoperated mechanical hands called “waldoes” are used to manipulate objects remotely.

The term “telepresence” itself was popularized by Marvin Minsky in his 1980 essay “Telepresence.” Minsky envisioned a future where people could use technology to project their presence remotely and interact with distant environments.

The first practical implementation of telepresence robots came to life in the late 1990s and early 2000s. Companies like iRobot and Anybots introduced early versions of telepresence robots that allowed users to remotely navigate and interact with their surroundings through a live video feed and audio communication. These robots were primarily used in business and educational settings to facilitate remote collaboration and communication.

iRobot Telepresence Robots – AVA 500 model

However, it was in the 2010s that telepresence robots gained more attention and widespread use. Advancements in technology, including improved video quality, better mobility, and more intuitive user interfaces, contributed to their increased popularity.

Telepresence robots have applications in various fields, including healthcare, education, business, and even personal use. They enable remote workers to participate in meetings and interact with colleagues, allow students to attend classes from remote locations, facilitate telemedicine by providing doctors with a virtual presence, and assist individuals with limited mobility to explore and engage with the world around them.

With the advancements in robotics, artificial intelligence, and communication technologies, telepresence robots are continually evolving. Today, we have more sophisticated models with advanced features like autonomous navigation, multi-modal interaction, and improved agility.

In conclusion, while the concept of telepresence robots can be traced back to science fiction works like “Waldo,” their practical implementation emerged in the late 1990s and early 2000s. Since then, they have gained popularity and found applications in various industries. Ongoing technological advancements are likely to further enhance the capabilities and functionalities of telepresence robots, expanding their potential applications and impact in the future.

Defibrillator

The concept of defibrillator robots, which combine the life-saving capabilities of defibrillators with robotic technology, can be traced back to science fiction literature and media. While defibrillators themselves have been around since the mid-20th century, the idea of robotic devices designed explicitly for administering defibrillation is a more recent development.

Mary Shelley’s novel “Frankenstein,” published in 1818, does not describe a defibrillator as a medical device. However, the concept of reviving life using electricity, which is central to the story, laid the foundation for the development of defibrillation technology.

The idea of using electricity to restore normal heart rhythm can be traced back to the late 18th century when Luigi Galvani conducted experiments with electrical stimulation on frog legs. These early experiments paved the way for understanding the role of electricity in stimulating muscle contractions, including those of the heart.

One notable example of science-fiction defibrillator robots is the medical droid known as “FX-7” in the Star Wars universe. FX-7 is depicted as a small, autonomous droid equipped with medical tools, including a defibrillator, used to provide emergency medical assistance to injured characters.

The modern defibrillator, as we know it today, emerged in the mid-20th century. In 1947, an American cardiac surgeon, Dr. Claude Beck, successfully revived a patient by applying a defibrillator directly to the exposed heart during surgery. This event marked a significant milestone in the development of defibrillation technology.

In the real world, the development of defibrillator robots can be attributed to advancements in medical technology and robotics. Automated external defibrillators (AEDs) have been widely available since the 1980s, allowing non-medical professionals to administer defibrillation during cardiac emergencies.

Automated external defibrillator semi-automatic

However, integrating robotic capabilities into AEDs took shape more recently. Researchers and engineers have explored the use of robotic systems to autonomously locate and reach patients in need of defibrillation, particularly in public spaces and large-scale events where response time is critical.

While fully autonomous defibrillator robots are still in the early stages of development, some notable advancements have been made. For example, in 2013, a team of researchers at the University of Illinois at Chicago created a prototype of a robotic system capable of navigating independently to a simulated cardiac arrest victim and delivering a defibrillation shock.

In the present world, defibrillator robots have yet to be widely deployed or commercially available. However, ongoing research and development efforts aim to improve the capabilities of these robots, such as integrating artificial intelligence for improved navigation and decision-making.

The potential benefits of defibrillator robots lie in their ability to provide timely and accurate defibrillation, especially in environments where the human response may be delayed or limited. They have the potential to enhance emergency medical services and increase the chances of survival for individuals experiencing sudden cardiac arrest.

In summary, while the concept of defibrillator robots originated in science fiction, the real-world development of these devices is still in progress. Ongoing research and technological advancements aim to create robotic systems that can autonomously locate, reach, and administer defibrillation to individuals in need, ultimately saving more lives.

Smartwatches

The idea of a wrist-worn device with advanced features can be traced back to various science fiction works. One notable example is the comic strip “Dick Tracy,” created by Chester Gould, which debuted in 1931. Its protagonist, a detective named Dick Tracy, wore a two-way wrist radio, a communication device worn like a wristwatch that was introduced to the strip in 1946. It allowed him to communicate with others and receive updates while on the go, without the need for traditional handheld devices.

The two-way wrist radio in Dick Tracy inspired the concept of a wrist-worn communication device with advanced features. Although the functionality of the two-way wrist radio was limited to voice communication in the comic strip, it laid the foundation for the idea of a wearable device that could offer more than just timekeeping.

In science fiction, the concept of advanced wearable devices continued to evolve. Numerous books, movies, and TV shows featured characters using wrist-worn devices with communication, computation, and data display capabilities. These fictional portrayals laid the groundwork for the concept of smartwatches.

The actual development of smartwatches began in the late 20th century and gained significant momentum in the 21st century. Early attempts at creating wrist-worn computing devices can be traced back to the 1980s and 1990s. For example, the Seiko RC series watches in the 1980s had basic calculator functions.

Seiko RC series smartwatches first concept

The turning point for smartwatches came with the advancements in mobile technology, particularly with the introduction of smartphones. Companies started exploring ways to connect smartphones with wearable devices to offer enhanced features and convenience.

One notable milestone in the history of smartwatches is the release of the “Pebble Smartwatch” in 2013. The Pebble Smartwatch gained attention for its e-paper display, smartphone connectivity, and ability to run third-party applications. It became a popular device among early adopters and helped pave the way for the modern smartwatch market.

Following the success of the Pebble Smartwatch, several major tech companies entered the smartwatch market. In 2014, Google introduced the Android Wear platform, which allowed various manufacturers to create smartwatches running on the Android operating system. Apple released the Apple Watch in 2015, marking its entry into the smartwatch market and bringing significant attention to the category.

Since then, smartwatches have continued to evolve, offering a wide range of features such as fitness tracking, heart rate monitoring, GPS navigation, mobile payments, and app integration. They have become popular accessories for individuals seeking convenient access to notifications, communication, health monitoring, and other functionalities on their wrists.

In conclusion, while the concept of wrist-worn devices with advanced capabilities can be traced back to science fiction works like Dick Tracy, the development of modern smartwatches began in the late 20th century and gained significant momentum in the 21st century. The integration of mobile technology, advancements in miniaturization, and the desire for enhanced convenience and functionality have propelled smartwatches into the mainstream market, making them a popular accessory for many people today.

Voice-Activated Assistants

The concept of voice-activated assistants, also known as virtual assistants or voice assistants, originates in science fiction literature and media. One notable early depiction can be found in the science fiction TV series “Star Trek,” which introduced the character of the ship’s computer known as “Computer” or “Computer Voice.” This intelligent computer system could understand and respond to voice commands, providing information and carrying out tasks for the crew.

The idea of voice-activated assistants gained further popularity and recognition after the science fiction film “2001: A Space Odyssey” was released in 1968. The film featured a highly advanced computer system named “HAL 9000” that responded to the crew’s spoken commands and conversed with them in natural language.

In the real world, the development of voice-activated assistants began in the late 20th century. It gained significant momentum in the 21st century with advancements in artificial intelligence and natural language processing technologies. One of the notable milestones in this regard is the launch of Apple’s Siri in 2011. Siri, a voice-activated personal assistant, allows users to interact with their iPhones using natural language commands, performing tasks, and answering questions.

Apple’s Siri logos

Following Siri’s introduction, other major technology companies, including Amazon with Alexa, Google with Google Assistant, and Microsoft with Cortana, released their voice-activated assistant platforms. These voice assistants can understand and respond to voice commands, provide information, perform tasks, and control smart home devices, among other functionalities.

Voice-activated assistants have since become integral to our everyday lives, extending beyond smartphones to other devices like smart speakers, smart TVs, and even cars. They have revolutionized how we interact with technology, offering hands-free convenience and personalized assistance.

Augmented Reality

Augmented Reality (AR) has its roots in science fiction literature and has made significant strides to become a reality in the present world. The concept of augmenting the real world with digital information and virtual elements has fascinated storytellers for decades.

One of the earliest references to augmented reality can be found in the 1901 novel “The Master Key” by L. Frank Baum. In the story, the protagonist receives a pair of spectacles called the “Character Marker,” which overlays a letter on the forehead of each person he meets to indicate their character, providing an augmented view of his surroundings.

The term “augmented reality” was coined in the 1990s by Tom Caudell, a researcher at Boeing, who used it to describe a digital display system that aided in aircraft assembly. However, it was in the early 2000s that augmented reality gained significant attention and became commercially viable.

One key milestone in the development of augmented reality was the release of the smartphone app “Layar” in 2009. Layar allowed users to view digital information overlaid on real-world scenes captured by smartphone cameras. This marked the beginning of widespread AR experiences accessible through everyday devices.

smartphone app “Layar” in 2009, first look

In 2016, the release of the mobile game “Pokémon Go” brought augmented reality into the mainstream. The game allowed players to see and capture virtual Pokémon creatures overlaid in real-world environments using their smartphones. This demonstrated the immense potential and widespread appeal of AR technology.

Since then, augmented reality has continued to evolve and find applications in various fields. AR has transformed how we interact with digital content and our physical surroundings, from entertainment and gaming to education, retail, healthcare, and architecture. Companies like Apple with its ARKit and Google with its ARCore have developed powerful AR platforms that enable developers to create immersive AR experiences for smartphones and other devices.

Augmented reality is experienced through special glasses, smartphone screens, headsets, or other display devices. By combining computer vision, motion tracking, and digital content rendering, AR overlays virtual elements onto the real world, enhancing our perception and interaction with the environment.

As technology progresses, augmented reality is expected to become even more sophisticated, seamless, and integrated into our daily lives. From interactive educational tools to practical real-time information displays and immersive gaming experiences, augmented reality can reshape how we perceive and interact with the world around us.

Hoverboards

Hoverboards are a famous sci-fi invention that has captured the imagination of many. The concept of hoverboards can be traced back to science fiction literature and films, where they are often depicted as levitating platforms that allow people to travel effortlessly above the ground.

One of the earliest references to hoverboards can be found in the science fiction film “Back to the Future Part II,” released in 1989. In this movie, the main character, Marty McFly, travels to the future and encounters a skateboard-like device that hovers above the ground.

However, it’s important to note that the hoverboards depicted in “Back to the Future Part II” were purely fictional and created for entertainment.

In reality, developing genuine hoverboards has been a significant engineering challenge. While there have been various attempts to create functional hoverboard prototypes, they have yet to become widely available as a means of transportation.

One notable attempt at creating a hoverboard-like device was the Hendo Hoverboard, unveiled in 2014 by Arx Pax. This device used magnetic levitation technology to hover above specially-designed surfaces. However, its practicality was limited by the need for specific infrastructure and its short battery life.

Hendo Hoverboard, unveiled in 2014 by Arx Pax, prototype

Since then, there have been advancements in personal transportation devices that incorporate self-balancing technology, resembling the appearance of hoverboards. These devices, known as self-balancing or electric scooters, feature two wheels and gyroscopic sensors that help users maintain balance while riding.

While these self-balancing scooters have gained popularity, it’s important to note that they do not actually hover above the ground like the fictional hoverboards depicted in science fiction. Instead, they rely on wheels to move and maintain stability.

Although fully functional hoverboards similar to those seen in science fiction are not yet available, ongoing research and technological advancements may bring us closer to realizing this concept.

Jetpacks

Jetpacks, the iconic devices that allow individuals to fly through the air, have long been a staple of science fiction. The concept of jetpacks can be traced back to the early 20th century, with notable appearances in various sci-fi works.

One of the earliest mentions of a jetpack-like device is in the 1928 pulp magazine series “Buck Rogers.” The protagonist, Buck Rogers, uses a “rocket belt” to navigate the skies. This portrayal captured the imagination of readers and helped popularize the idea of personal flight with jetpacks.

In the 1960s, the concept of jetpacks gained further attention with the introduction of the “Jetsons” animated television series. The show featured characters flying around using personal jetpacks, showcasing a futuristic transportation vision.

The first real-world attempt at developing a functional jetpack came in the 1960s with the Bell Rocket Belt. Developed by Bell Aerosystems, the Rocket Belt was a rocket-powered device worn on the back that allowed for vertical takeoff and limited flight. However, it had limited range and flight time, making it impractical for widespread use.

In 1999, Jetpack International released the JB-10, a significant milestone in the development of jetpack technology.

The JB-10 jetpack was groundbreaking, offering improved performance and functionality compared to previous attempts. It was designed to be lightweight, portable, and capable of sustained flight. The device utilized hydrogen peroxide as a fuel, which produced high-pressure steam for thrust when combined with a catalyst.

With the JB-10, individuals could experience the exhilaration of personal flight for extended durations. It could reach altitudes of up to 250 feet (76 meters) and achieve speeds of approximately 68 miles per hour (110 kilometers per hour). The jetpack was controlled through handgrips, allowing users to steer and maneuver in the air.

The release of the JB-10 jetpack generated significant excitement and marked a major step forward in bringing the concept of personal flight to reality. It showcased the potential for jetpacks for transportation, recreation, and adventure.

Since then, Jetpack International has continued to refine and innovate its jetpack designs. They have introduced newer models with enhanced performance and safety features. These jetpacks have been utilized for various purposes, including aerial displays, entertainment events, and promotional activities.

While jetpacks like the JB-10 have demonstrated the possibility of personal flight, their practical application remains limited. Factors such as fuel consumption, flight time, and regulatory considerations have posed challenges to their widespread use as a mainstream mode of transportation.

Nonetheless, the release of the JB-10 in 1999 served as a significant milestone in jetpack technology’s evolution, capturing people’s imaginations worldwide and inspiring further advancements in the field. It showcased the potential for personal flight and paved the way for continued research and development in pursuit of safer, more efficient, and commercially viable jetpack solutions.

jetpack JB-10 in 1999 on test

While jetpacks have not yet become a standard mode of transportation in the present world, they have found niche applications in search and rescue operations, aerial stunts, and military uses. Additionally, experimental prototypes and concept designs are continuously being developed, pushing the boundaries of personal flight.

The quest for practical and commercially viable jetpacks continues, with ongoing research and development efforts to improve performance, safety, and efficiency. Although the full realization of widespread jetpack use remains a challenge, the concept of personal flight with jetpacks has made significant progress since its sci-fi origins, captivating imaginations and inspiring continued innovation in aviation.

Holograms

The concept of holograms as a sci-fi invention can be traced back to various works of fiction, but one notable early depiction is in the novel “Foundation” by Isaac Asimov, published in 1951. In the book, holograms are described as three-dimensional images projected in mid-air.

Hungarian-British physicist Dennis Gabor first proposed holography as a scientific concept in 1947. He developed the theory of holography, which involves recording and reconstructing the interference pattern of light waves to create three-dimensional images. Gabor’s work laid the foundation for the development of practical holography.

The first functional holograms were created in the 1960s using lasers. In 1962, Yuri Denisyuk, a Soviet physicist, developed a method called white-light reflection holography, which allowed three-dimensional holographic images to be recorded and then viewed under ordinary white light. This breakthrough opened up new possibilities for holography and brought it closer to the depictions seen in science fiction.

Significant progress in holographic displays was made in 1997 when researchers at the University of Texas at Austin created the first 3D display. This achievement marked a milestone in the development of holographic technology.

Zelensky speaks at Viva Technology conference in form of hologram

The researchers at the University of Texas developed a technique known as electro-holography, which involved using a liquid crystal display (LCD) and a spatial light modulator to generate holographic images. This breakthrough allowed for the creation of three-dimensional holograms that could be viewed from different angles without needing special glasses or devices.

The 3D holographic display created at the University of Texas was a significant step forward in making holography more practical and accessible. It opened up new possibilities for applications in fields such as entertainment, advertising, and scientific visualization.

The advancement of holographic technology continued in the following decades. Techniques such as reflection and rainbow holography were developed, enabling the creation of more realistic and visually impressive holograms.

In the present world, holograms have become a reality, although they are less advanced or widespread than some science fiction depictions may suggest. Holographic displays and projections are used in various applications, such as art installations, advertising, and entertainment.

For example, holographic displays have been employed in concerts and live performances to create visually stunning effects. Additionally, holographic technology has found applications in fields like medicine, engineering, and scientific visualization, where three-dimensional representations can aid in understanding complex structures or data.

While actual holographic displays that can be viewed from multiple angles without the need for special glasses or devices are still under development, advancements in holography continue to push the boundaries of what is possible. Researchers are working on techniques such as digital holography and volumetric displays to bring us closer to the immersive holographic experiences depicted in science fiction.

Overall, the origin of holograms as a sci-fi invention can be traced back to early works of fiction, and the development of practical holography began in the 1960s. Since then, holograms have gradually become a reality, finding applications in various fields and bringing us closer to the futuristic depictions seen in science fiction literature and films.

Robotics and Androids

The concept of robots and androids, or human-like robots, has a long history in science fiction literature and mythology. The idea of artificial beings that mimic or surpass human capabilities has fascinated people for centuries.

One of the earliest references to artificial beings can be found in ancient mythology, such as the story of Pygmalion in Greek mythology, where a sculptor creates a statue that comes to life. However, the modern concept of robots and androids began to take shape in science fiction literature during the 19th and early 20th centuries.

One notable early example is the play “R.U.R.” (Rossum’s Universal Robots) by Karel Čapek, first performed in 1920. In this play, the term “robot” was introduced and referred to artificial beings created to serve humanity. The robots in Čapek’s play appeared humanoid and performed laborious tasks.

As for when robots and androids came to life in the real world, significant advancements in robotics began in the mid-20th century. In 1954, George Devol invented the first industrial robot, the Unimate, used for tasks like handling hot metal parts in factories. This marked the beginning of practical robotic applications in industries.

In 2000, Honda unveiled ASIMO, whose name stands for Advanced Step in Innovative Mobility. ASIMO is a humanoid robot designed to resemble a small astronaut and showcase advanced robotics technology.

ASIMO was a significant milestone in robotics as it demonstrated impressive capabilities such as walking on two legs, climbing stairs, and running. It was equipped with advanced sensors, cameras, and sophisticated software that allowed it to navigate its surroundings and interact with humans.

ASIMO’s development was driven by Honda’s vision to create a robot to assist and support humans in various tasks, such as helping older people or working in hazardous environments. While ASIMO’s abilities were impressive for its time, it should be noted that it was primarily a research and development platform rather than a commercially available product.

Since then, robotics has advanced rapidly, with robots now used in various fields such as manufacturing, healthcare, space exploration, and more. In recent years, there have been significant advancements in humanoid robotics, with robots becoming more sophisticated in their appearance and capabilities.

Ameca – one of the most modern and human-like robots

While we do not yet have fully autonomous androids that closely resemble humans, as in science fiction, robotics technology continues progressing, and researchers are continually working towards creating more advanced and capable robots.

Space Tourism

Space tourism, or the idea of ordinary individuals traveling to space for recreational purposes, has its roots in science fiction literature and media. Science fiction stories have long imagined a future where space travel becomes accessible to the general public, allowing people to experience the wonders of space firsthand.

One notable early mention of space tourism can be found in the works of Jules Verne. His novel “From the Earth to the Moon,” published in 1865, features a group of individuals who embark on a journey to the moon aboard a spacecraft. While the story’s primary focus is not tourism per se, it touches upon the idea of humans venturing into space for exploration and adventure.

In terms of real-world implementation, the first significant steps toward space tourism began in the early 2000s. In 2001, the American entrepreneur Dennis Tito became the first private individual to travel to space as a tourist. He paid a considerable sum to the Russian space agency for a trip of about eight days to the International Space Station (ISS).

This milestone paved the way for the development of commercial space tourism as a viable industry. In subsequent years, several companies emerged to offer space travel experiences to paying customers. One notable company is Virgin Galactic, founded by Richard Branson in 2004, which aims to provide suborbital spaceflights for tourists.

SpaceX, led by Elon Musk, has also been advancing space tourism. The company has announced plans to send private individuals on trips around the moon and even to other destinations in the solar system.

SpaceX first tourist crew

As technology and infrastructure continue to improve, space tourism is expected to become more accessible to a broader range of individuals, eventually fulfilling the sci-fi vision of ordinary people journeying into space for leisure and exploration.

Gesture-Based Interfaces

Gesture-based interfaces, which allow users to interact with digital systems using hand movements and gestures, originate in science fiction literature and films. The idea of controlling devices or interacting with virtual interfaces through gestures has been a recurring theme in futuristic depictions.

Gesture-Based Interfaces in medicine

One early example of gesture-based interfaces can be found in the science fiction film “Minority Report,” released in 2002, directed by Steven Spielberg and based on a short story by Philip K. Dick. In the movie, characters manipulate digital content by performing gestures in the air without the need for physical touch or traditional input devices.

While gesture-based interfaces were first imagined in science fiction, they came to life in the real world during the 2000s. In 2007, Apple introduced the iPhone, which featured a multi-touch screen allowing users to interact with the device using gestures such as swiping, pinching, and tapping.

However, the breakthrough for gesture-based interfaces came with the release of the Microsoft Kinect in 2010. Initially developed as a gaming accessory for the Xbox console, the Kinect combined cameras, depth sensors, and microphones to track players’ movements and gestures.

This allowed users to control games and navigate menus with body movements alone, without a physical controller, and marked a significant advance toward more intuitive and immersive gaming.

The Kinect sensor also found applications beyond gaming. Developers and researchers began exploring its potential in fitness, healthcare, education, and even robotics. The ability to capture and interpret human gestures opened up new possibilities for user interaction and control in various domains.

Since then, gesture-based interfaces have become more prevalent and have found applications in various domains. They have been adopted in gaming, virtual reality systems, smart TVs, and even industrial settings, where gesture-based controls operate machinery or control robots.

Additionally, the rise of augmented and virtual reality technologies has further advanced gesture-based interfaces. Devices like the Oculus Quest and HTC Vive allow users to interact with virtual environments and manipulate objects using hand gestures, enhancing the immersive experience.

While gesture-based interfaces are still evolving and have some limitations, they have become integral to modern technology interfaces. As technology advances, we can expect further developments in gesture recognition and interaction, bringing us closer to the futuristic visions depicted in science fiction.

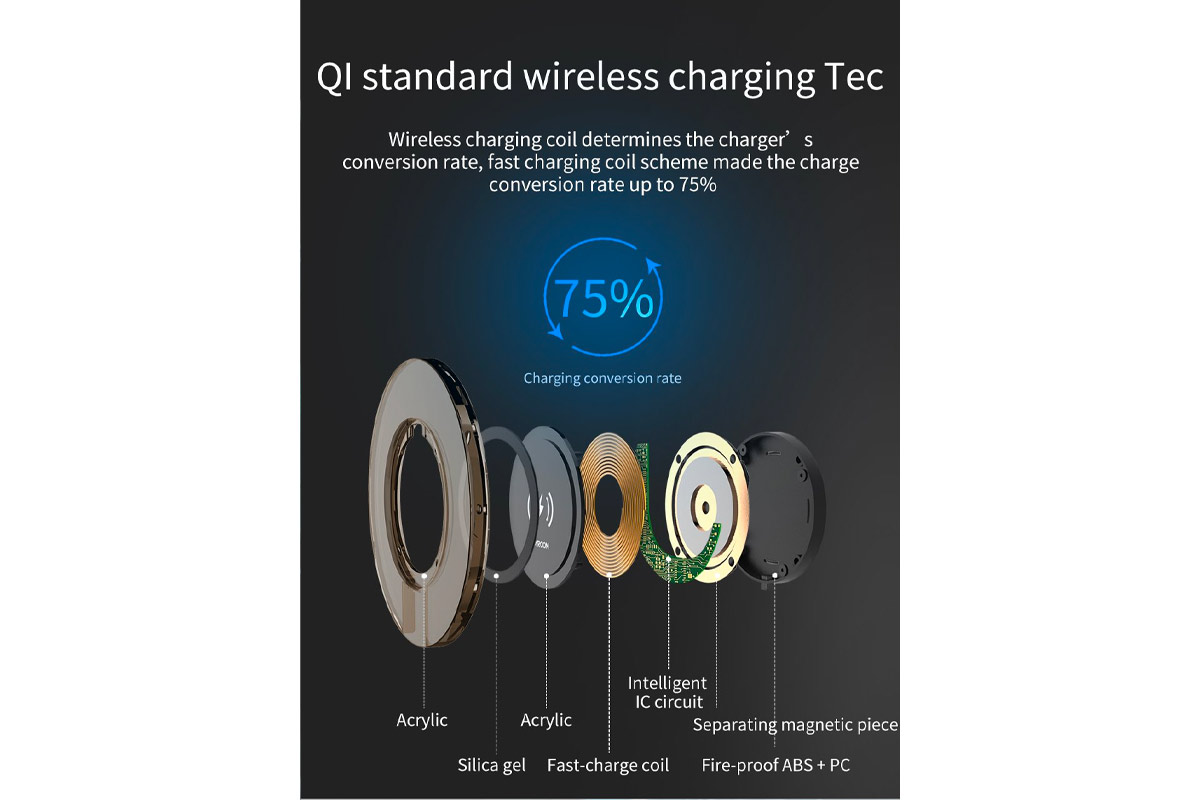

Wireless Charging

Wireless charging has long been depicted in science fiction as a futuristic technology that eliminates the need for physical connectors and cords. The idea also has real-world roots in the work of Nikola Tesla, a renowned inventor and electrical engineer. Around the turn of the 20th century, Tesla envisioned a world where electricity could be transmitted wirelessly, powering devices without traditional wired connections.

While wireless charging existed in science fiction, its practical implementation took several decades to materialize. The first significant steps towards wireless charging were made in the 20th century. In the 1980s, researchers began exploring the concept of inductive charging, which uses electromagnetic fields to transfer power between a charging station and a device.

The breakthrough in commercializing wireless charging came in the early 2010s. In 2010, the Wireless Power Consortium (WPC) introduced the Qi standard, a global standard for wireless charging technology. This standardization paved the way for the widespread adoption of wireless charging in consumer electronics.

Qi standard technology

Since then, wireless charging has become increasingly prevalent, with many smartphones, tablets, and other portable devices incorporating the technology. Various industries, including automotive and healthcare, have also embraced wireless charging for their products.

It is worth noting that while wireless charging has become a reality, the technology still has limitations, including the need for close proximity between the charging device and the charger. However, ongoing advancements in wireless charging technology aim to address these limitations and further expand its applications in the future.

Biometric Identification

The concept of biometric identification, which involves using unique physiological or behavioral characteristics to identify individuals, has its roots in science fiction and real-world scientific exploration.

In science fiction, the idea of using biometric characteristics for identification is often traced back to early 20th-century writers such as H.G. Wells, co-author of the popular biology work “The Science of Life” (1929–30). Using fingerprints as a form of identification became a reality in forensics and law enforcement around the turn of the 20th century.

In the real world, the scientific exploration of biometric identification began in the late 19th century. Sir Francis Galton, a British scientist and cousin of Charles Darwin, conducted extensive research on fingerprints and their uniqueness. Galton’s work laid the foundation for the scientific study of biometrics.

The practical implementation of biometric identification came much later with advancements in technology. In the 1960s and 1970s, the development of automated fingerprint recognition systems began, allowing for faster and more accurate identification of individuals based on their fingerprints.

Other biometric modalities, such as iris and facial recognition, gained traction in the late 20th century. The first commercial iris recognition system was introduced in the 1990s, and facial recognition technology was deployed in various applications.

Today, biometric identification has become widespread, with applications in various industries, including law enforcement, border control, healthcare, and access control systems. It has significantly improved security and convenience in many aspects of our daily lives.

Solar Power and Renewable Energy

The concept of harnessing solar power and renewable energy sources originates in science fiction and real-world scientific exploration.

In science fiction literature, the idea of drawing on natural, sustainable energy sources can be traced back to early works. For example, in Jules Verne’s novel “The Mysterious Island,” published in 1874, the castaways use an improvised lens to focus sunlight and start a fire, and one character predicts that water, split into hydrogen and oxygen, will one day replace coal as fuel.

In the real world, the exploration of solar power and renewable energy began in the 19th century. Scientists and inventors conducted experiments to harness the power of the sun and other renewable sources.

One notable milestone in solar power development was the discovery of the photovoltaic effect by Alexandre-Edmond Becquerel in 1839. This discovery laid the foundation for developing solar cells, which convert sunlight into electricity.

Throughout the 20th century, scientists and engineers significantly advanced solar power technology. In the 1950s, the first practical solar cell was created, and in the following decades, improvements were made in efficiency and cost-effectiveness.

The widespread adoption of solar power and renewable energy began in the late 20th century and continues to grow today. Government incentives, technological advancements, and increasing environmental awareness have contributed to expanding solar power installations and developing other renewable energy sources like wind, hydroelectric, and geothermal power.

Geothermal power plant working

Today, solar panels can be found on rooftops, large-scale solar farms, and various devices. Renewable energy has become increasingly important as societies strive to reduce carbon emissions and transition to more sustainable energy sources.

Biometric Payments and Authentication

The concept of biometric payments and authentication, which involves using unique physiological or behavioral characteristics for identification and transaction purposes, can be traced back to various works of science fiction. However, the actual implementation and development of biometric technology in real-world applications took place over several decades.

Biometric identification and authentication can be seen in science fiction stories and movies as early as the mid-20th century, where characters use their fingerprints, retinal scans, or voice recognition to access secure areas or conduct financial transactions. These depictions sparked the imagination and interest in using biometric data for real-world applications.

In the late 20th century, significant progress was made in biometric technology and its practical implementation. The first notable development came with fingerprint recognition in law enforcement and forensic investigations. The automation and digitization of fingerprint-matching processes paved the way for its application in various industries, including financial services.

One of the earliest examples of biometric payments and authentication in the real world can be traced back to the 1990s. In 1997, the South Korean government introduced a fingerprint-based biometric system for its national ID card program. This marked one of the first large-scale implementations of biometrics for identification and authentication purposes.

In the early 2000s, biometric technologies, including fingerprint and iris scanning, gained traction in the financial sector. Banks and financial institutions started exploring biometric authentication as a more secure and convenient method for customer verification. This led to the integration of biometric authentication into ATMs, allowing customers to perform transactions using their fingerprints or palm prints.

Since then, biometric payments and authentication have continued to evolve and expand. Today, various biometric modalities, such as fingerprint, facial recognition, voice recognition, and even behavioral biometrics, are used in different payment systems and authentication methods.

Mobile devices, such as smartphones and tablets, have played a significant role in the adoption of biometric payments. Technologies like Apple’s Touch ID and Face ID and Android’s fingerprint and facial recognition systems have popularized biometrics for secure mobile payments.

Furthermore, biometric payment cards, which incorporate fingerprint sensors directly on the card, have been introduced recently. These cards enable users to authenticate transactions by simply placing their finger on the card’s sensor, adding an extra layer of security and convenience.

As technology advances, biometric payments and authentication are expected to become more prevalent in various industries. Combining biometrics with other emerging technologies like artificial intelligence and blockchain could further enhance security and privacy in digital transactions.

Overall, the journey of biometric payments and authentication from science fiction to reality has been driven by technological advancements, increased security demands, and the desire for more seamless user experiences in the digital age.

Internet of Things (IoT)

The concept of the Internet of Things (IoT) as a sci-fi invention can be traced back to various works of fiction depicting interconnected devices and objects communicating with one another. However, the actual development and implementation of IoT technologies took place over several decades.